A bit of history

More than a decade ago, Qt focused solely on OpenGL to deliver hardware-accelerated graphics. That was the era of QGLWidget, which brought OpenGL to QWidget applications. The second generation of QtQuick was built on an OpenGL scene graph to render scenes written in QML.

But times have changed: OpenGL never truly succeeded on Windows; instead, Microsoft further developed and pushed Direct3D. Apple introduced the Metal API as the canonical way to render graphics on its operating systems and deprecated OpenGL at the same time. The Khronos consortium, the same consortium that is responsible for OpenGL, unveiled Vulkan in 2016. What all of the new graphics APIs have in common is that they utilize the GPU more effectively by significantly reducing overhead.

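Meet RHI

RHI stands for Rendering Hardware Interface. It is Qt’s abstraction layer for hardware-accelerated graphics and supports the following backends: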

- OpenGL ES

- Metal

- Direct3D 11

- Direct3D 12

- Vulkan

The API has been available since the late Qt 5 days, and it has been publicly accessible since Qt 6.6. As RHI is very low level, even drawing something as simple as a single triangle requires a fair amount of code. If you just want to add a shader to your QtQuick program, or light and display a 3D object, consider other solutions first (such as a ShaderEffect in QtQuick or Qt 3D for a 3D scene). But if you need full control to implement your ideas, RHI may be a helpful API in your toolbox.

For example, we used RHI to implement the RiveQtQuickPlugin.

This blog post will guide you step by step through a basic demo application: We will create a QWindow and initialize a rendering pipeline to render some basic geometry to it.

The building blocks

In its most basic form, an application needs the following building blocks to render something into a window:

At initialization time:

- An instance of a QRhi object: This is the central class that helps to set up everything else.

- A QRhiSwapChain: A swap chain represents the connection between the API and the window surface.

- Some buffers to store the geometry and the inputs to the shaders. When using RHI, you use the class QRhiBuffer to store this kind of data. A QRhiResourceUpdateBatch is used to move the data to memory that is accessible by the GPU.

- A rendering pipeline that defines what to do with the aforementioned buffers. A pipeline consists of shader stages and information about how the vertex data is structured. You also need to define what to do with the rendering result. In detail, the following must be considered:

- To define how the geometry is laid out, use QRhiVertexInputLayout: Does the geometry buffer just store vertex positions? Does it also include texture coordinates and per vertex colors? Is the data distributed over multiple buffers or stored in one buffer? This defines how the geometry buffer is used as the input for the vertex shader (see below).

- You need at least two shader stages: A vertex shader and a fragment shader. The vertex shader takes the vertices and transforms them to so-called clip space positions. The fragment shader determines how the primitive is filled.

- You need to inform the pipeline about uniforms and textures. Uniforms are inputs that keep the same value for all invocations of the shader within a draw call (for example the screen resolution, a time step, etc.). This is done with QRhiShaderResourceBindings.

- Last but not least, the pipeline needs to know where to render into. In our example, the triangle is rendered via the swap chain to the window. But you can also render to an offscreen texture. Besides one or multiple color buffers (the image that is rendered), a depth and/or stencil buffer may also be attached to the target. This is where QRhiRenderPassDescriptor comes into play. In RHI, the descriptor is created from the swap chain or the render target.

During rendering:

- Rendering of a frame is done between beginFrame() and endFrame().

- QRhiCommandBuffer: A command buffer is needed during rendering. Modern architectures collect rendering commands and submit them to the GPU in one batch. For architectures that don’t support command buffers, RHI emulates the behavior.

- Rendering of a single pass is done between beginPass() and endPass(). A frame may consist of multiple passes. For example, you may decide to render different parts of your scene to different offscreen textures and blend them together in a final pass.

- Set a graphics pipeline you configured during initialization

- Set the viewport size

- Set your input geometry

- Call draw() to render the geometry
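To make the viewport step a bit more tangible, here is a minimal sketch in plain C++ (no Qt required) of how a position in normalized device coordinates ends up at a pixel position inside the viewport. The types Ndc and Pixel and the function toViewport() are hypothetical helpers for illustration and not part of RHI. The sketch assumes that the viewport starts at (0, 0) and that y = -1 maps to pixel row 0; the actual y direction depends on the graphics API.

```cpp
#include <cassert>

// Hypothetical helper types for this sketch; they are not part of Qt.
struct Ndc { float x; float y; };     // normalized device coordinates in [-1, 1]
struct Pixel { float x; float y; };   // position inside the viewport in pixels

// Maps normalized device coordinates to pixel coordinates of a viewport
// that starts at (0, 0) and has the given size.
// Assumption: y = -1 maps to pixel row 0 (this differs between graphics APIs).
Pixel toViewport(Ndc p, float width, float height)
{
    assert(width > 0.0f && height > 0.0f);
    return { (p.x + 1.0f) * 0.5f * width,
             (p.y + 1.0f) * 0.5f * height };
}
```

With a 600 x 600 viewport, the point (-0.5, -0.5) ends up at pixel (150, 150) and the point (0.0, 0.5) at pixel (300, 450) under these assumptions.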

So much for the theory. The source code in the next section demonstrates how all of this fits together by drawing a triangle to the screen.

Walkthrough

We begin by implementing the main.cpp:

#include <QGuiApplication>
#include <QLoggingCategory>

#include "hellotriangle.h"

int main(int argc, char **argv)
{
    QGuiApplication app(argc, argv);

    // Enable the RHI logging categories (prints graphics driver details)
    QLoggingCategory::setFilterRules(QLatin1String("qt.rhi.*=true"));

    HelloTriangle window;
    window.resize(600, 600);
    window.setTitle(QCoreApplication::applicationName());
    window.show();

    int retVal = app.exec();

    // Release the swap chain while the native window still exists
    if (window.handle()) {
        window.releaseSwapChain();
    }

    return retVal;
}

Here, we just create a QGuiApplication and the HelloTriangle window. When the logging category is set to the qt.rhi domain, Qt prints some debug messages about the graphics driver to the console. The HelloTriangle class is implemented below.

First, let’s have a look at the header file:

#pragma once

#include <QWindow>
#include <QVector>

#include <private/qrhi_p.h>

class QExposeEvent;
class QEvent;

class HelloTriangle : public QWindow
{
    Q_OBJECT

public:
    HelloTriangle(QWindow *parent = nullptr);
    ~HelloTriangle();

    void releaseSwapChain();

public slots:
    void render();

protected:
    void exposeEvent(QExposeEvent *exposeEvent) override;
    bool event(QEvent *event) override;

private:
    void maybeInitialize();
    QShader loadShader(const QString &filename);

    bool m_isInitialized { false };
    bool m_maybeResizeSwapChain { false };

    QRhi *m_rhi { nullptr };
    QRhiSwapChain *m_swapChain { nullptr };
    QRhiRenderPassDescriptor *m_renderPassDescriptor { nullptr };
    QRhiResourceUpdateBatch *m_initialUpdates { nullptr };
    QRhiBuffer *m_vertexBuffer { nullptr };
    QRhiShaderResourceBindings *m_shaderResourceBindings { nullptr };
    QRhiGraphicsPipeline *m_renderingPipeline { nullptr };

    QVector<QRhiResource *> m_releasePool;
};

The implementation of the class follows:

#include "hellotriangle.h"

#include <QCoreApplication>
#include <QDebug>
#include <QExposeEvent>
#include <QPlatformSurfaceEvent>
#include <QEvent>
#include <QFile>
#include <QOffscreenSurface>

#if QT_VERSION >= QT_VERSION_CHECK(6, 6, 0)
#include <rhi/qrhi.h>
#else
#include <private/qrhigles2_p.h>
#endif

static float vertexData[] = {
    -0.5f, -0.5f, 0.0f,
     0.5f, -0.5f, 0.0f,
     0.0f,  0.5f, 0.0f
};

HelloTriangle::HelloTriangle(QWindow *parent)
    : QWindow(parent)
{
    setSurfaceType(OpenGLSurface); // 1
}

HelloTriangle::~HelloTriangle()
{
    qDeleteAll(m_releasePool); // 2
    m_releasePool.clear();

    delete m_rhi;
    m_rhi = nullptr;
}

QShader HelloTriangle::loadShader(const QString &filename) // 3
{
    QFile shaderFile(filename);
    bool success = shaderFile.open(QIODevice::ReadOnly);
    if (success) {
        return QShader::fromSerialized(shaderFile.readAll());
    }

    qWarning() << "Cannot open shader file:" << filename;
    return QShader();
}

void HelloTriangle::exposeEvent(QExposeEvent *exposeEvent) // 4
{
    if (isExposed()) {
        m_maybeResizeSwapChain = true;
        maybeInitialize();
        render();
    }

    QWindow::exposeEvent(exposeEvent);
}

bool HelloTriangle::event(QEvent *event) // 5
{
    switch (event->type()) {
    case QEvent::UpdateRequest:
        render();
        break;
    case QEvent::PlatformSurface:
        if (static_cast<QPlatformSurfaceEvent *>(event)->surfaceEventType() == QPlatformSurfaceEvent::SurfaceAboutToBeDestroyed) {
            releaseSwapChain();
        }
        break;
    default:
        break;
    }

    return QWindow::event(event);
}

void HelloTriangle::maybeInitialize() // 6
{
    if (m_isInitialized) {
        return;
    }

    const int sampleCount = 1;

    // init RHI and swap chain to render into a window
    QRhiGles2InitParams params; // 7
    params.window = this;
    QOffscreenSurface *fallbackSurface = QRhiGles2InitParams::newFallbackSurface();
    params.fallbackSurface = fallbackSurface;
    m_rhi = QRhi::create(QRhi::OpenGLES2, &params);
    if (!m_rhi) {
        // without a QRhi instance nothing can be rendered
        qWarning() << "Failed to create the QRhi instance";
        return;
    }

    m_swapChain = m_rhi->newSwapChain(); // 8
    m_releasePool << m_swapChain; // 9
    m_swapChain->setWindow(this); // 10
    m_swapChain->setSampleCount(sampleCount);

    m_renderPassDescriptor = m_swapChain->newCompatibleRenderPassDescriptor(); // 11
    m_releasePool << m_renderPassDescriptor;
    m_swapChain->setRenderPassDescriptor(m_renderPassDescriptor);
    m_swapChain->createOrResize();

    // init vertex data
    m_initialUpdates = m_rhi->nextResourceUpdateBatch(); // 12
    m_vertexBuffer = m_rhi->newBuffer(QRhiBuffer::Immutable, QRhiBuffer::VertexBuffer, sizeof(vertexData)); // 13
    m_vertexBuffer->create();
    m_releasePool << m_vertexBuffer;
    m_initialUpdates->uploadStaticBuffer(m_vertexBuffer, 0, sizeof(vertexData), vertexData);

    // define vertex layout // 14
    QRhiVertexInputLayout vertexInputLayout;
    vertexInputLayout.setBindings({ { 3 * sizeof(float) } });
    vertexInputLayout.setAttributes({
        { 0, 0, QRhiVertexInputAttribute::Float3, 0 },
    });

    // there must be a QRhiShaderResourceBindings
    // the hello triangle example does not have any uniforms and textures as shader inputs, so we leave it empty
    m_shaderResourceBindings = m_rhi->newShaderResourceBindings(); // 15
    m_releasePool << m_shaderResourceBindings;
    m_shaderResourceBindings->create();

    // init rendering pipeline
    m_renderingPipeline = m_rhi->newGraphicsPipeline(); // 16
    m_releasePool << m_renderingPipeline;
    m_renderingPipeline->setShaderStages({
        { QRhiShaderStage::Vertex, loadShader(QLatin1String(":/shaders/hellotriangle.vert.qsb")) },
        { QRhiShaderStage::Fragment, loadShader(QLatin1String(":/shaders/hellotriangle.frag.qsb")) }
    });
    m_renderingPipeline->setTopology(QRhiGraphicsPipeline::Triangles);
    m_renderingPipeline->setVertexInputLayout(vertexInputLayout);
    m_renderingPipeline->setShaderResourceBindings(m_shaderResourceBindings);
    m_renderingPipeline->setRenderPassDescriptor(m_renderPassDescriptor);
    m_renderingPipeline->create();

    // initialization is complete
    m_isInitialized = true;
}

void HelloTriangle::render()
{
    if (!isExposed() || !m_isInitialized || !m_swapChain) {
        return;
    }

    if (m_swapChain->currentPixelSize() != m_swapChain->surfacePixelSize() || m_maybeResizeSwapChain) { // 17
        bool resizeDidWork = m_swapChain->createOrResize();
        if (!resizeDidWork) {
            return;
        }
        m_maybeResizeSwapChain = false;
    }

    const QSize outputPixelSize = m_swapChain->currentPixelSize();

    m_rhi->beginFrame(m_swapChain); // 18

    QRhiCommandBuffer *commandBuffer = m_swapChain->currentFrameCommandBuffer(); // 19

    QRhiResourceUpdateBatch *updateBatch = m_rhi->nextResourceUpdateBatch(); // 20
    if (m_initialUpdates) {
        updateBatch->merge(m_initialUpdates);
        m_initialUpdates->release();
        m_initialUpdates = nullptr;
    }

    commandBuffer->beginPass(m_swapChain->currentFrameRenderTarget(), Qt::black, { 1.0, 0 }, updateBatch); // 21

    commandBuffer->setGraphicsPipeline(m_renderingPipeline); // 22
    commandBuffer->setViewport({ 0, 0, float(outputPixelSize.width()), float(outputPixelSize.height()) });
    commandBuffer->setShaderResources();

    const QRhiCommandBuffer::VertexInput vertexInputBinding(m_vertexBuffer, 0); // 23
    commandBuffer->setVertexInput(0, 1, &vertexInputBinding);

    commandBuffer->draw(3); // 24

    commandBuffer->endPass();

    m_rhi->endFrame(m_swapChain); // 25

    requestUpdate(); // 26
}

void HelloTriangle::releaseSwapChain() // 27
{
    if (m_swapChain) {
        m_swapChain->destroy();
    }
}

To break down the source code piece by piece, here is what happens:

1) For didactic purposes, we simplify the code by hardcoding the window’s surface type to OpenGLSurface. In a real-world application, you most likely want to be more flexible and determine the surface type from the platform for which the application has been compiled:

#if defined(Q_OS_WIN)
    setSurfaceType(Direct3DSurface);
#elif defined(Q_OS_MACOS) || defined(Q_OS_IOS)
    setSurfaceType(MetalSurface);
#elif QT_CONFIG(vulkan)
    setSurfaceType(VulkanSurface);
#else
    setSurfaceType(OpenGLSurface);
#endif

2) The destructor: It is common practice to store all RHI resources in a list for convenient batch deletion. QRhi does not inherit from QRhiResource, so we handle its deletion separately.
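The release pool idea itself does not depend on Qt. The following sketch shows it in plain C++, assuming a hypothetical Resource base class that stands in for QRhiResource. ReleasePool and CountedResource are made-up names for illustration only; the example application simply uses a QVector and qDeleteAll().

```cpp
#include <cassert>
#include <vector>

// Stand-in for QRhiResource: a polymorphic base with a virtual destructor.
struct Resource {
    virtual ~Resource() = default;
};

// Hypothetical resource that tracks how many instances are currently alive.
struct CountedResource : Resource {
    explicit CountedResource(int &counter) : alive(counter) { ++alive; }
    ~CountedResource() override { --alive; }
    int &alive;
};

// Collects raw pointers and deletes all of them in one go.
class ReleasePool {
public:
    ReleasePool() = default;
    ReleasePool(const ReleasePool &) = delete;
    ReleasePool &operator=(const ReleasePool &) = delete;
    ~ReleasePool() { releaseAll(); }

    // Takes ownership of the resource and hands the pointer back for further use.
    template <typename T>
    T *add(T *resource)
    {
        m_items.push_back(resource);
        return resource;
    }

    // Deletes every pooled resource in insertion order and empties the pool.
    void releaseAll()
    {
        for (Resource *resource : m_items)
            delete resource;
        m_items.clear();
    }

private:
    std::vector<Resource *> m_items;
};
```

The benefit of this pattern is that cleanup stays in one place: a newly created resource only has to be added to the pool once, and no individual delete calls are needed later.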

3) A helper function to load shaders. A shader must be distributed in the QSB format, a container format that stores (compiled) shader code for different graphics APIs. See also the section on the shader code below.

4) Overrides the QWindow::exposeEvent method. When the window is first exposed, we need to initialize the rendering pipeline. This event is also triggered when the user resizes the window. In this case, we may have to resize the swap chain later in the rendering code, which is indicated by the m_maybeResizeSwapChain flag.

5) Overrides QWindow::event: We listen to update and platform surface events. Whenever there is an update request, a render step is triggered. When the window is closed, we get notified that the surface is about to be destroyed, and we destroy the swap chain.

6) The initialization code: everything that needs to be done before the first call to render().

7) Create the central QRhi object; depending on the platform, the initialization process is different. The RHI backend must match the surface type of the window. For the different initialization parameters refer to the documentation.
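The rule that the backend must match the surface type can be written down as a small lookup. The following sketch uses stand-in enums that only mirror the names of QSurface::SurfaceType and QRhi::Implementation, so that it compiles without Qt; the function backendFor() is hypothetical. Mapping Direct3DSurface to D3D11 is just one possible choice, as Direct3D 12 uses the same surface type.

```cpp
#include <cassert>

// Stand-ins for QSurface::SurfaceType and QRhi::Implementation.
// Assumption: only the values needed for this sketch are listed here.
enum class SurfaceType { OpenGLSurface, VulkanSurface, MetalSurface, Direct3DSurface };
enum class Backend { OpenGLES2, Vulkan, Metal, D3D11 };

// Returns a backend that is compatible with the given window surface type.
Backend backendFor(SurfaceType type)
{
    switch (type) {
    case SurfaceType::VulkanSurface:
        return Backend::Vulkan;
    case SurfaceType::MetalSurface:
        return Backend::Metal;
    case SurfaceType::Direct3DSurface:
        return Backend::D3D11; // D3D12 would be valid for this surface type as well
    case SurfaceType::OpenGLSurface:
        break;
    }
    return Backend::OpenGLES2;
}
```

In a real application, each backend additionally needs its own init params object, as described in the documentation mentioned above.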

8) The swap chain connects RHI and the window.

9) To avoid deleting all resources separately, we just append them to a release pool list.

10) Initialize the swap chain: A sample count value above 1 enables Multi-Sample Antialiasing (MSAA). Don’t forget to set the window, otherwise the application will crash at startup.

11) The renderpass descriptor is essential for the render pipeline that we are going to create in a few more steps. When the pipeline renders the triangle, it must know what to do with the results, i.e. which buffers to use for storing color, depth and stencil values, and their respective types. In RHI, you create compatible descriptors from the swap chain or texture targets. After creating a descriptor, it must be set for the swap chain or the texture target, as RHI doesn’t handle this automatically. In this example, the pipeline will only render into a color buffer; there is no attached depth or stencil target.

12) RHI batches upload operations. Here, we create an initial upload batch that we are going to commit when rendering the first frame.

13) Here, we create a buffer to store the vertices of the triangle. The buffer is static (QRhiBuffer::Immutable), i.e. we don’t update the vertex data between draw calls.

14) From the outside, the vertex buffer contains just numbers. The pipeline needs to know about the structure of the buffer. In other words, we need a mapping between the data format and the attributes we declare in the vertex shader. As a reminder, this is the triangle’s geometry:

static float vertexData[] = {
    -0.5f, -0.5f, 0.0f,
     0.5f, -0.5f, 0.0f,
     0.0f,  0.5f, 0.0f
};

Each vertex consists of three numbers (x, y, z). The vertex shader declares a single input attribute at location 0 to receive them:

layout(location = 0) in vec3 position;

The vertex input layout connects the two. There is one binding with a stride of three floats per vertex, and one attribute that reads from binding 0, feeds shader location 0, has the format Float3 and starts at byte offset 0:

// define vertex layout
QRhiVertexInputLayout vertexInputLayout;
vertexInputLayout.setBindings({ { 3 * sizeof(float) } });
vertexInputLayout.setAttributes({
    { 0, 0, QRhiVertexInputAttribute::Float3, 0 },
});

For comparison, this is how a geometry with an additional texture coordinate per vertex could be set up:

// geometry with position and texture coordinate (x, y, z, u, v)
static float vertexData[] = {
    -0.5f, -0.5f, 0.0f, 0.0f, 0.0f,
     0.5f, -0.5f, 0.0f, 1.0f, 0.0f,
     0.0f,  0.5f, 0.0f, 0.5f, 1.0f,
};

// vertex shader input
layout(location = 0) in vec3 position;
layout(location = 1) in vec2 texCoord;

// define vertex layout in C++
QRhiVertexInputLayout vertexInputLayout;
vertexInputLayout.setBindings({ { 5 * sizeof(float) } });
vertexInputLayout.setAttributes({
    { 0, 0, QRhiVertexInputAttribute::Float3, 0 },
    { 0, 1, QRhiVertexInputAttribute::Float2, quint32(3 * sizeof(float)) },
});

Now, each vertex consists of five numbers: the x, y, z position and the u, v texture coordinates. The vertex shader gets a second attribute with the location 1. Each invocation fetches five numbers from the buffer, where the first three numbers form the input of the first attribute and the next two numbers form the input of the second attribute. The binding point for both attributes is 0, as they are fed from the same buffer at binding point 0.
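Instead of counting floats by hand, the stride and the attribute offsets can also be derived from a struct. The following is a minimal sketch in plain C++, assuming a hypothetical Vertex type that mirrors the (x, y, z, u, v) layout; the helper functions vertexStride() and texCoordOffset() are made up for illustration and not part of RHI.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical vertex type mirroring the interleaved (x, y, z, u, v) layout.
struct Vertex {
    float position[3];
    float texCoord[2];
};

// The layout only matches the float array if the compiler adds no padding.
static_assert(sizeof(Vertex) == 5 * sizeof(float), "unexpected padding in Vertex");

// Size of one vertex in bytes: the stride of the binding.
constexpr std::size_t vertexStride()
{
    return sizeof(Vertex);
}

// Byte offset of the texture coordinate within one vertex:
// the offset of the second attribute.
constexpr std::size_t texCoordOffset()
{
    return offsetof(Vertex, texCoord);
}
```

With such a type, the binding could be set up with vertexStride() and the second attribute with quint32(texCoordOffset()), so the numbers stay correct when a member is added to the struct.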

15) In the basic triangle example, the shaders don’t get any uniforms or textures as input. Nevertheless, the pipeline expects a shader resource binding object. Here, we just create one without setting any bindings on it.

16) Finally, we are prepared to create the pipeline. A pipeline must have at least one vertex shader and one fragment shader. The pipeline needs to know how the input is laid out (vertex attributes and shader resources like uniforms and textures), and where to render the results (render pass descriptor).

17) After initialization and setting up at least one rendering pipeline, we are ready to render the triangle. First, we check whether the swap chain needs to be resized (for example, when the user resizes the window and triggers an expose event).

18) Rendering a frame always happens between a call to beginFrame() and a call to endFrame().

19) Modern rendering APIs collect all rendering commands in a command buffer that is committed to the GPU in one batch.

20) Here, we request a new update batch from RHI that can be used to update any dynamic buffers like uniform buffers. Before rendering the first frame, we merge the initial updates with the new update batch. We pass the update batch as a parameter to beginPass(), so that RHI can commit the batch.

21) A render pass begins. A render pass renders to a specific target like a texture or the swap chain. In this case, we render to the window via the swap chain. We set the clear color to black and the initialization values for the depth and stencil buffers to 1.0 and 0 respectively (note that depth and stencil buffers do not exist in the example, but it is not possible to omit the values).

22) During one render pass, we may use multiple rendering pipelines to render to the same render target. For this example, we set the rendering pipeline responsible for the triangle.

23) Set the vertex input, and bind the vertex buffer to binding point 0

24) Draw three points

25) Request a new frame. Since the triangle is not animated, the line can be commented out. Qt ensures that update events triggered with requestUpdate() are in sync with the display vsync signal of your monitor, if possible.

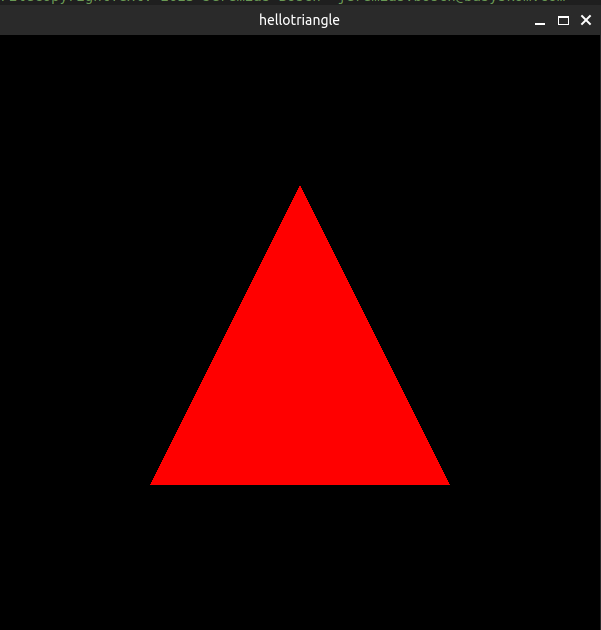

Et voilà, after executing these 25 steps, the application renders a triangle:

The shader code

The graphics pipeline must have at least two programmable shader stages: a vertex shader stage to process vertex geometry and a fragment shader stage to colorize the geometry. For the triangle, we are using a simple passthrough vertex shader that doesn’t do any transformations. In most applications, you probably want to multiply the vertex position with a model-view matrix and a projection matrix.

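The vertex shader (hellotriangle.vert):

#version 440

layout(location = 0) in vec3 position;

out gl_PerVertex { vec4 gl_Position; };

void main()
{
    gl_Position = vec4(position, 1.0);
}

The fragment shader (hellotriangle.frag) fills the triangle with a solid red color:

#version 440

layout(location = 0) out vec4 fragColor;

void main()
{
    fragColor = vec4(1.0, 0.0, 0.0, 1.0);
}

Before the application can load the shaders, they have to be converted to the QSB format with Qt’s qsb tool:

qsb --glsl "100 es,120,150" --hlsl 50 --msl 12 -o hellotriangle.vert.qsb hellotriangle.vert
qsb --glsl "100 es,120,150" --hlsl 50 --msl 12 -o hellotriangle.frag.qsb hellotriangle.frag

Conclusion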

We have illustrated the process of setting up a graphics pipeline with RHI to render hardware-accelerated content on a variety of platform-specific APIs, including OpenGL, Direct3D 11 and 12, Metal, and Vulkan. After reading this blog post, you should be able to upload geometry and render it to a window.

Of course, in real world applications you might want to render onto a QWidget or within a QtQuick application. Many shaders receive uniform buffers and textures as input.

But this is not all: RHI enables more complex tasks, such as utilizing tessellation, geometry or even compute shader stages, configuring stencil buffers and scissor rectangles, or rendering to offscreen buffers. In upcoming blog posts, we’ll delve deeper into these technologies.

Please let us know in the comments if you have already worked with RHI and which use cases you are interested in.